Day by day, the evidence is mounting that Facebook is bad for society. Last week Channel 4 News in London tracked down Black Americans in Wisconsin who were targeted by President Trump’s 2016 campaign with negative advertising about Hillary Clinton—“deterrence” operations to suppress their vote.

A few weeks ago, meanwhile, I was included in a discussion organized by the Computer History Museum, called Decoding the Election. A fellow panelist, Hillary Clinton’s former campaign manager Robby Mook, described how Facebook worked closely with the Trump campaign. Mook refused to have Facebook staff embedded inside Clinton’s campaign because it did not seem ethical, while Trump’s team welcomed the opportunity to have an insider turn the knobs on the social network’s targeted advertising.

Taken together, these two pieces of information are damning for the future of American democracy: Trump’s team openly marked 3.5 million Black Americans for deterrence in their data set, while Facebook’s own staff aided voter suppression efforts. As Siva Vaidhyanathan, the author of Anti-Social Media, has said for years: “The problem with Facebook is Facebook.”

While research and reports from academics, civil society, and the media have long made these claims, regulation has not yet come to pass. But at the end of September, Facebook’s former director of monetization, Tim Kendall, gave testimony before Congress that suggested a new way to look at the site’s deleterious effects on democracy. He outlined Facebook’s twin objectives: making itself profitable and trying to control a growing mess of misinformation and conspiracy. Kendall compared social media to the tobacco industry. Both have focused on increasing the capacity for addiction. “Allowing for misinformation, conspiracy theories, and fake news to flourish were like Big Tobacco’s bronchodilators, which allowed the cigarette smoke to cover more surface area of the lungs,” he said.

The comparison is more than metaphorical. It’s a framework for thinking about how public opinion needs to shift so that the true costs of misinformation can be measured and policy can be changed.

Personal choices, public dangers

It might seem inevitable today, but regulating the tobacco industry was not an obvious choice to policymakers in the 1980s and 1990s, when they struggled with the notion that it was an individual’s choice to smoke. Instead, a broad public campaign to address the dangers of secondhand smoke is what finally broke the industry’s heavy reliance on the myth of smoking as a personal freedom. It wasn’t enough to suggest that smoking causes lung disease and cancer, because those were personal ailments—an individual’s choice. But secondhand smoke? That showed how those individual choices could harm other people.

Epidemiologists have long studied the ways in which smoking endangers public health, and detailed the increased costs from smoking cessation programs, public education, and enforcement of smoke-free spaces. To achieve policy change, researchers and advocates had to demonstrate that the cost of doing nothing was quantifiable in lost productivity, sick time, educational programs, supplementary insurance, and even hard infrastructure expenses such as ventilation and alarm systems. If these externalities hadn’t been acknowledged, perhaps we’d still be coughing in smoke-filled workplaces, planes, and restaurants.

And, like secondhand smoke, misinformation damages the quality of public life. Every conspiracy theory, every propaganda or disinformation campaign, affects people—and the expense of not responding can grow exponentially over time. Since the 2016 US election, newsrooms, technology companies, civil society organizations, politicians, educators, and researchers have been working to quarantine the viral spread of misinformation. The true costs have been passed on to them, and to the everyday folks who rely on social media to get news and information.

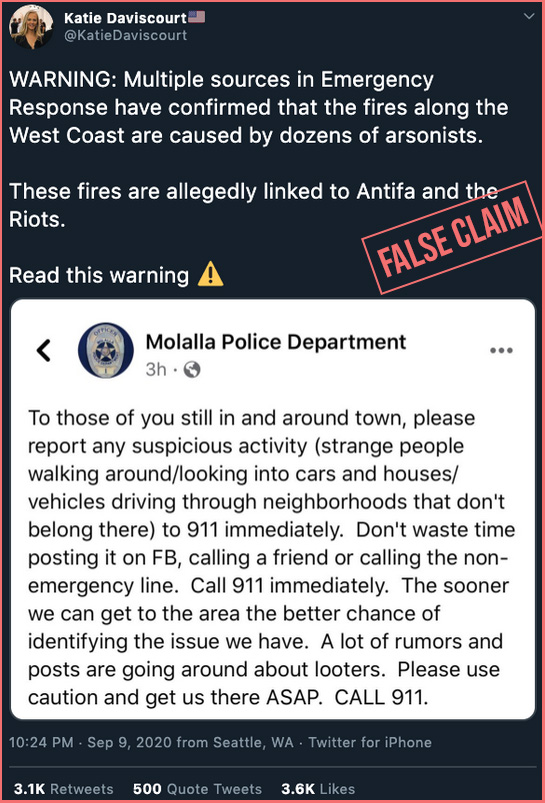

Take, for example, the recent falsehood that antifa activists are lighting the wildfires on the West Coast. This began with a small local rumor repeated by a police captain during a public meeting on Zoom. That rumor then began to spread through conspiracy networks on the web and social media. It reached critical mass days later after several right-wing influencers and blogs picked up the story. From there, different forms of media manipulation drove the narrative, including an antifa parody account claiming responsibility for the fires. Law enforcement had to correct the record and ask folks to stop calling in reports about antifa. By then, millions of people had been exposed to the misinformation, and several dozen newsrooms had had to debunk the story.

The costs are very real. In Oregon, fears about “antifa” are emboldening militia groups and others to set up identity checkpoints, and some of these vigilantes are using Facebook and Twitter as infrastructure to track those they deem suspicious.

Online deception is now a multimillion-dollar global industry, and the emerging economy of misinformation is growing quickly. Silicon Valley corporations are largely profiting from it, while key political and social institutions are struggling to win back the public’s trust. If we aren’t prepared to confront the direct costs to democracy, understanding who pays what price for unchecked misinformation is one way to increase accountability.

Combating smoking required a focus on how it diminished the quality of life for nonsmokers, and a decision to tax the tobacco industry to raise the cost of doing business.

Now, I am not suggesting placing a tax on misinformation, which would have the unintended effect of sanctioning its proliferation. Taxing tobacco has stopped some from taking up the habit, but it has not prevented the public health risk. Only limiting the places people can smoke in public did that. Instead, technology companies must address the negative externalities of unchecked conspiracy theories and misinformation and redesign their products so that this content reaches fewer people. That is in their power, and choosing not to do so is a personal choice that their leaders make.

from MIT Technology Review https://ift.tt/3lrf1Jx
